Introduction

How is video recorded and stored?

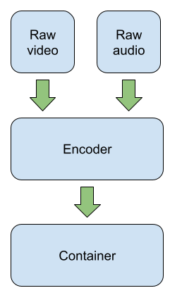

The videos that we watch on YouTube or TV are made up of two components – video & audio. Capturing them is quite straightforward in theory: you record a number of frames per second (usually between 24 and 50, depending on your needs), encoding the data into a stream of bytes, and record and encode the audio separately.

Then you use a codec (Coder / Decoder) to encode these separate recordings and combine them into a single container file. When the recording is played back, each stream of data is passed to its respective device: the audio stream is passed to the sound card and the video stream is passed to the video/graphics card, and playback of the two is kept in sync.
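
To make the idea of a container and synced playback more concrete, here is a minimal Python sketch that interleaves timestamped audio and video packets into one stream and then splits them back out for their respective devices. The `Packet` type and the `mux`/`demux` functions are hypothetical illustrations, not a real container format; formats such as MP4 or MKV also store headers, indexes and codec settings.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    stream: str        # which device the data is for: "video" or "audio"
    timestamp: float   # when the packet should be presented, in seconds
    payload: bytes     # the encoded (compressed) data


def mux(video, audio):
    """Interleave two timestamp-sorted packet lists into one container stream."""
    return list(heapq.merge(video, audio, key=lambda p: p.timestamp))


def demux(container):
    """Split the container back into one stream per output device."""
    streams = {"video": [], "audio": []}
    for packet in container:
        streams[packet.stream].append(packet)
    return streams


# 24 frames per second of video, plus an audio packet every 0.02 seconds
video = [Packet("video", i / 24, b"frame") for i in range(3)]
audio = [Packet("audio", i * 0.02, b"sound") for i in range(5)]

container = mux(video, audio)
print([(p.stream, round(p.timestamp, 3)) for p in container])
```

Because the packets are ordered by time, a player reading the container can hand each one to the right device as its moment arrives, which is one simple way of keeping sound and picture in sync.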

The catch, of course, is that storing such a large number of frames takes up a huge amount of space, so the video (and audio) needs to be compressed. This is done by the codec as part of the encoding process, as seen later on. Depending on which codec is used, and therefore which compression algorithm, the final video file will vary in file size, quality and playback device compatibility.

Further Reading: H.264 vs H.265

Common Video Resolutions

Different video cameras support different video resolutions, for example:

- HD 720p – 1280 x 720

- Full HD 1080p – 1920 x 1080

- Ultra HD 4K or 2160p – 3840 x 2160

- Ultra HD 8K or 4320p – 7680 x 4320
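
A quick way to see how much more data each step up in resolution means is to compare the number of pixels in a single frame. This short Python sketch uses only the resolutions listed above; the dictionary labels are just for display.

```python
# Resolutions from the list above: (width, height) in pixels
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "2160p (4K)": (3840, 2160),
    "4320p (8K)": (7680, 4320),
}


def pixels_per_frame(width: int, height: int) -> int:
    return width * height


base = pixels_per_frame(*RESOLUTIONS["1080p"])
for name, (w, h) in RESOLUTIONS.items():
    count = pixels_per_frame(w, h)
    print(f"{name:>11}: {count:>10,} pixels ({count / base:.2f}x 1080p)")
```

Doubling both the width and the height quadruples the pixel count, so a 4K frame holds four times as many pixels as a 1080p frame and an 8K frame sixteen times as many.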

Choosing a video resolution when recording

You could choose to record at the highest quality, but there are 3 issues:

- Higher resolutions/FPS require more storage capacity and greater transmission bandwidth

- Higher resolutions/FPS use more battery power in your video camera than a lower resolution/FPS

- Most playback devices (TVs, cinemas, phones) don’t support 8K, and you probably wouldn’t notice the difference for most content

Therefore when recording videos using your phone or a dedicated video camera you need to think about what you are going to be recording (do you need a high FPS to slow down action?) and how your content is going to be viewed (is it going to be on high resolution devices?).

Compression

Video Compression

Why do we need video compression?

Without video compression, video files would be HUGE!!!!

Let's imagine we record a 5 minute movie of a cat playing, uncompressed and recorded at 1080p (1920 x 1080):

- 1920 x 1080 = 2,073,600 pixels per frame

- Each pixel requires 3 bytes (one each for the red, green and blue colour values), so each frame needs 2,073,600 x 3 = 6,220,800 bytes. We will assume the camera records at 24 frames per second, a common frame rate.

- 2,073,600 x 3 x 24 = 149,299,200 bytes, or about 149 Megabytes per second.

- 149 Megabytes x 300 seconds (5 minutes) ≈ 45 Gigabytes (almost 10 single-layer DVDs' worth of data!)
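
The arithmetic above can be checked with a few lines of Python. This sketch assumes 3 bytes per pixel and 24 frames per second, as in the example, and uses decimal units (1 MB = 1,000,000 bytes, 1 GB = 1,000,000,000 bytes); audio and container overhead are ignored.

```python
def uncompressed_bytes(width, height, bytes_per_pixel, fps, seconds):
    """Raw video size in bytes, ignoring audio and any container overhead."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * seconds


per_second = uncompressed_bytes(1920, 1080, 3, 24, 1)
five_minutes = uncompressed_bytes(1920, 1080, 3, 24, 300)

print(f"{per_second / 1e6:.1f} MB per second")        # 149.3 MB per second
print(f"{five_minutes / 1e9:.1f} GB for 5 minutes")   # 44.8 GB for 5 minutes
```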

We haven’t even added on any sound data that we might have recorded with the video.

This is obviously a nightmare. At roughly 9 Gigabytes per minute, streaming even 1 minute of uncompressed cat video would use up the data allowance on many mobile phone plans!

Redundancy

Spatial & Temporal Redundancy

Video compression works by exploiting two key areas – spatial and temporal redundancy.

Spatial Redundancy

Much like in image processing, there is often a close correlation between the contents of a pixel and the contents of neighboring pixels; this is known as spatial redundancy. Not all of the data about every pixel needs to be kept: some of it can be discarded or recorded at a lower bit depth.
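
One of the simplest ways to exploit neighboring pixels being the same is run-length encoding (RLE), sketched below in Python on a single row of pixels. RLE is used here only as an easy-to-follow, lossless illustration; real video codecs such as H.264 tackle spatial redundancy with more sophisticated (and lossy) techniques such as transform coding and quantisation. The sky and cloud colour values are made up for the example.

```python
from itertools import groupby


def run_length_encode(row):
    """Collapse runs of identical neighboring pixels into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(row)]


def run_length_decode(runs):
    """Expand (value, count) pairs back into the original row of pixels."""
    return [value for value, count in runs for _ in range(count)]


# One row of a frame: mostly blue sky with a white cloud (hypothetical RGB values)
SKY = (135, 206, 235)
CLOUD = (255, 255, 255)
row = [SKY] * 10 + [CLOUD] * 4 + [SKY] * 6

encoded = run_length_encode(row)
print(encoded)  # [((135, 206, 235), 10), ((255, 255, 255), 4), ((135, 206, 235), 6)]
assert run_length_decode(encoded) == row  # lossless: the row is rebuilt exactly
```

Twenty pixels shrink to three (colour, count) pairs because neighboring pixels share the same value; a noisy row with no repeated neighbors would not shrink at all.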

Temporal Redundancy

When a video is recorded, the majority of the image often stays pretty much the same from frame to frame, with only certain areas changing significantly. This means that entire frames, or large parts of each frame, can be discarded and replaced by references to the data in the previous frame. Also, objects within the frame often move from location to location without their appearance changing. In this case a motion vector, describing how far and in which direction the object has moved, can be calculated and used to rebuild the object in the following frame from its data in the previous frame.
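
Both ideas can be sketched in Python on a single row of greyscale pixels (a simplification: real codecs work on 2D blocks of pixels). `changed_pixels` stores only what differs from the previous frame, and `best_motion_vector` performs a basic block-matching search, scoring each candidate shift by the sum of absolute differences (SAD). The function names and pixel values are hypothetical, chosen for illustration.

```python
def changed_pixels(previous, current):
    """Frame differencing: keep only (position, new value) for pixels that changed."""
    return [(i, c) for i, (p, c) in enumerate(zip(previous, current)) if p != c]


def apply_changes(previous, changes):
    """Rebuild the current frame from the previous frame plus the stored changes."""
    frame = list(previous)
    for i, value in changes:
        frame[i] = value
    return frame


def best_motion_vector(previous, current, start, size, search_range):
    """Block matching: find the shift d for which previous[start + d : start + d + size]
    best matches current[start : start + size], scored by sum of absolute differences."""
    block = current[start:start + size]
    best = None
    for d in range(-search_range, search_range + 1):
        src = start + d
        if src < 0 or src + size > len(previous):
            continue  # the candidate block would fall outside the previous frame
        sad = sum(abs(a - b) for a, b in zip(block, previous[src:src + size]))
        if best is None or sad < best[1]:
            best = (d, sad)
    return best  # (motion vector, matching error)


# One row of greyscale pixels: a bright object (9s) moves 3 pixels to the right
previous = [0, 0, 9, 9, 9, 0, 0, 0, 0, 0, 0, 0]
current = [0, 0, 0, 0, 0, 9, 9, 9, 0, 0, 0, 0]

changes = changed_pixels(previous, current)
print(len(changes), "of", len(current), "pixels changed")  # 6 of 12 pixels changed
print(best_motion_vector(previous, current, start=5, size=3, search_range=4))  # (-3, 0)
```

A motion vector of -3 with zero error tells the decoder to copy the object's block from 3 pixels further left in the previous frame, so the object's pixels do not need to be stored again.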

Using these techniques allows for huge file size reductions with minimal loss in quality, which is especially important when streaming over mobile networks.

Example – Video Stills

Take a look at the video stills below. These are 2 stills that were taken 1 frame apart. Can you tell any differences between them? Open the files in a new tab and see if you can spot any!

Encoding

Progressive vs Interlaced Encoding

Interlaced Encoding

Due to the limited bandwidth available for sending video over the airwaves, many television signals are encoded using interlaced encoding. The video is recorded at 50 frames per second, but only every other line of each frame is transmitted: the odd-numbered lines in one frame and the even-numbered lines in the next. When the television receives the signal, these chopped-up (interlaced) frames need to be combined back together (deinterlaced) before being displayed.
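
The half-frames sent in each transmission are usually called fields. This Python sketch splits a frame into its odd and even fields and then rebuilds it using "weave", one simple deinterlacing method. Lines are represented as strings purely for readability. Weaving only reproduces the frame perfectly when nothing moved between the two fields; in real interlaced video the fields are captured at different moments, so moving objects show "combing" artifacts, which is why TVs often use more elaborate deinterlacing.

```python
def split_fields(frame):
    """Split a frame (a list of lines) into its odd and even fields.
    Lines are numbered from 1, so the odd lines sit at indices 0, 2, 4, ..."""
    return frame[0::2], frame[1::2]


def weave(odd, even):
    """Deinterlace by weaving the two fields back into one full frame."""
    frame = []
    for i in range(max(len(odd), len(even))):
        if i < len(odd):
            frame.append(odd[i])
        if i < len(even):
            frame.append(even[i])
    return frame


frame = [f"line {n}" for n in range(1, 7)]
odd, even = split_fields(frame)
print(odd)   # ['line 1', 'line 3', 'line 5'], sent in one transmission
print(even)  # ['line 2', 'line 4', 'line 6'], sent in the next
print(weave(odd, even) == frame)  # True
```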

Progressive Encoding

Most modern digital recording and streaming systems don’t use interlaced video signals. Because the recordings are compressed, there is enough bandwidth to send every full frame.

When you see 1080p on a video it means that it has been recorded using progressive scan encoding.

When you see 1080p on a TV it means that it is capable of natively playing back progressively encoded videos. It is also able to play back interlaced video, though it needs to deinterlace the video in order to remove the interlacing artifacts.

Bit Rate

When compressing, recording or streaming a video you are often given a choice of different bit rates. Bit rate refers to the number of bits required for each second of video recorded or transmitted. For example, a typical 1080p video might be recorded at a bit rate of 8 Mb/s (megabits per second): every second of video/audio requires 8 million bits (1 Megabyte) of storage.
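
Bit rate makes file sizes easy to estimate: multiply by the duration and divide by 8 to convert bits into bytes. This Python sketch assumes a constant bit rate (many encoders actually use a variable bit rate, so real files differ somewhat) and compares the 8 Mb/s example with the raw bit rate of 1080p video at 24 frames per second and 3 bytes per pixel.

```python
def file_size_bytes(bit_rate_bps, seconds):
    """Storage needed for a stream at a constant bit rate (8 bits per byte)."""
    return bit_rate_bps * seconds / 8


compressed_rate = 8_000_000                    # 8 Mb/s, the example 1080p bit rate
uncompressed_rate = 1920 * 1080 * 3 * 8 * 24   # raw 1080p: bits per frame x 24 fps

five_minutes = 300
print(f"{file_size_bytes(compressed_rate, five_minutes) / 1e6:.0f} MB")        # 300 MB
print(f"compression ratio about {uncompressed_rate / compressed_rate:.0f}:1")  # 149:1
```

At 8 Mb/s, the 5 minute cat video takes about 300 MB rather than the roughly 45 GB needed uncompressed.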

Distribution

Distributing Video Content

Broadcasting

Originally most video was broadcast using radio waves and everybody received the same video feed – hence the term broadcast. Because of the limited spectrum available the number of television channels in an area was limited.

This led to the development of cable and satellite television services, which meant that a much larger number of television channels could be broadcast. These services were still broadcast to every cable/satellite subscriber, which is why it was relatively easy to hack satellite/cable feeds and get free cable.

More recently, standard television broadcasting switched from analogue to digital, which allowed far more channels as well as higher-resolution broadcasts to normal television sets. This higher quality meant that some long-running television shows had to completely redesign their sets, as viewers could now spot details that they previously would not have noticed.

VHS vs DVD vs BluRay

From the 1980s onwards, video began to be distributed more widely on different physical media:

- 1980s to 1990s – VHS, approximately 330 x 480 (an analogue format, so this is an equivalent resolution)

- 1990s to 2000s – DVD, 720 x 480

- 2000s onwards – Blu-ray, 1920 x 1080 (higher for some content)

Streaming

Within the last 10 years, streaming has largely overtaken DVD and Blu-ray for the distribution of video content, mainly because streaming video is more convenient than buying physical discs. Videos are encoded at multiple resolutions and bit rates so that the quality and reliability of playback can be maximized for each device and connection.

This shift has led to a number of businesses going bust, most famously Blockbuster Video.

When you upload a video to a streaming site such as YouTube it is converted from a single source resolution into multiple resolutions for optimum playback on different devices and internet speeds. This process is known as transcoding.
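
Having several transcoded versions lets a player switch quality to suit the viewer's connection. The Python sketch below shows one simple way a player could choose: take the highest bit rate that fits comfortably within the measured bandwidth. The rendition ladder values and the 0.8 safety margin are made-up assumptions for illustration, not any particular site's settings; real adaptive streaming protocols such as HLS and MPEG-DASH make this decision repeatedly, segment by segment, using more sophisticated rules.

```python
# Hypothetical rendition ladder produced by transcoding: (label, bit rate in bits/s)
LADDER = [
    ("1080p", 8_000_000),
    ("720p", 5_000_000),
    ("480p", 2_500_000),
    ("360p", 1_000_000),
]


def choose_rendition(bandwidth_bps, ladder=LADDER, safety=0.8):
    """Pick the highest-bit-rate rendition that fits within a fraction (safety)
    of the measured bandwidth; fall back to the lowest one if none fits."""
    usable = bandwidth_bps * safety
    for label, rate in sorted(ladder, key=lambda r: r[1], reverse=True):
        if rate <= usable:
            return label
    return min(ladder, key=lambda r: r[1])[0]


print(choose_rendition(20_000_000))  # fast broadband
print(choose_rendition(4_000_000))   # patchy mobile signal
print(choose_rendition(500_000))     # very slow connection
```

Leaving a safety margin below the measured bandwidth reduces the chance of the video pausing to buffer when the connection speed dips.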

Digital Rights Management

Piracy is a major concern with all forms of video content and so companies use a variety of techniques to stop or limit piracy. The umbrella term for this is digital rights management.

Techniques include:

- Restricting media so that it only plays back on certain devices or in certain regions (DVDs have region codes)

- Restricting downloaded media so that it only plays when the device is connected to the internet, in order to validate the license

- Restricting media so that it only plays using certain software and streaming plugins

- Time-limiting content so that it will only play back within a certain time frame

- Installing software that stops DVDs from being illegally copied
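
The region and time restrictions above boil down to a check made before playback starts. This Python sketch is purely conceptual: the `License` fields, the `may_play` function and the country-code regions are hypothetical. Real DRM systems (for example Widevine, PlayReady and FairPlay) enforce such rules using encryption and licence servers, because a plain check like this would be trivial to bypass.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class License:
    # Hypothetical license fields, for illustration only
    regions: frozenset      # regions the content may be played in
    not_before: datetime    # start of the viewing window
    not_after: datetime     # end of the viewing window


def may_play(license: License, region: str, now: datetime) -> bool:
    """Allow playback only in a permitted region and within the time window."""
    return region in license.regions and license.not_before <= now <= license.not_after


rental = License(
    regions=frozenset({"GB"}),
    not_before=datetime(2024, 1, 1, tzinfo=timezone.utc),
    not_after=datetime(2024, 1, 3, tzinfo=timezone.utc),  # a 48-hour rental
)
print(may_play(rental, "GB", datetime(2024, 1, 2, tzinfo=timezone.utc)))  # True
print(may_play(rental, "US", datetime(2024, 1, 2, tzinfo=timezone.utc)))  # False: wrong region
print(may_play(rental, "GB", datetime(2024, 1, 5, tzinfo=timezone.utc)))  # False: expired
```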

Resources

Video Encoding & Compression Online Crossword

Past Paper Questions

- Interlacing – S19 Paper 11 – Qn 6

- Progressive Encoding – N18 Paper 11 – Qn 1d/e

- Spatial & temporal redundancy – N16 Paper 12 – Qn 2

- N19 Paper 11 – Qn 5