Images

Image Compression

Why do we need image compression?

Let’s take the image above. This image is a relatively small 2 Megapixel image, with dimensions of 2133 x 974 pixels.

This image is a 24-bit RGB image, meaning that its uncompressed file size should be:

2133 x 974 x 24 = 49,861,008 bits ≈ 49.9 Megabits

Divide by 8 to get bytes: 6,232,626 bytes ≈ 6.2 Megabytes
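The arithmetic above can be checked with a few lines of Python. This is a minimal sketch: `raw_image_bits` and `transfer_seconds` are hypothetical helper names, file headers are ignored, and the 3.3 Mbps link speed is an assumption chosen because it is roughly what the "about 15 seconds" figure implies.

```python
def raw_image_bits(width, height, bits_per_pixel):
    """Uncompressed size of a bitmap image in bits (ignores any file header)."""
    return width * height * bits_per_pixel

def transfer_seconds(size_bits, link_bits_per_second):
    """Time to send size_bits over a link of the given speed (ignores protocol overhead)."""
    return size_bits / link_bits_per_second

bits = raw_image_bits(2133, 974, 24)
print(f"{bits / 1_000_000:.1f} Megabits")       # 49.9 Megabits
print(f"{bits / 8 / 1_000_000:.1f} Megabytes")  # 6.2 Megabytes
# Hypothetical 3.3 Mbps upload speed
print(f"{transfer_seconds(bits, 3_300_000):.0f} seconds")  # 15 seconds
```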

This massive file would take about 15 seconds to send to a friend, even with an excellent phone connection. A full-size uncompressed 20 Megapixel photo, of the kind modern smartphones take, would take at least a minute!

The file above has been compressed slightly using a lossy compression algorithm and is only 500 KB (12x smaller!)

How does it work?

Slight changes in color are not perceived well by the human eye, while slight changes in intensity (light and dark) are. JPEG's lossy encoding therefore keeps the brightness (gray-scale) detail of an image carefully, while discarding much more of the color detail.
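One common way JPEG encoders exploit this is chroma subsampling: the image is converted from RGB into one brightness channel (Y) and two colour channels (Cb, Cr), every brightness value is kept, but each 2x2 block of colour values is averaged into one (often called 4:2:0). The sketch below assumes a tiny image stored as a list of rows of `(r, g, b)` tuples with even width and height, and uses the JFIF conversion formulas. It leaves out the DCT, quantisation and entropy-coding steps a real JPEG encoder also performs; all function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cb, Cr) using the JFIF formulas."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_420(image):
    """Keep every Y value, but average each 2x2 block of Cb and Cr values into one."""
    height, width = len(image), len(image[0])
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    y_plane = [[p[0] for p in row] for row in ycc]  # full-resolution brightness
    cb_plane, cr_plane = [], []
    for r in range(0, height, 2):
        cb_row, cr_row = [], []
        for c in range(0, width, 2):
            block = [ycc[r][c], ycc[r][c + 1], ycc[r + 1][c], ycc[r + 1][c + 1]]
            cb_row.append(sum(p[1] for p in block) / 4)  # quarter-resolution colour
            cr_row.append(sum(p[2] for p in block) / 4)
        cb_plane.append(cb_row)
        cr_plane.append(cr_row)
    return y_plane, cb_plane, cr_plane

def count_values(planes):
    """Total number of stored values across all planes."""
    return sum(len(row) for plane in planes for row in plane)

# A 2 x 4 image: 8 pixels x 3 channels = 24 values before subsampling
image = [[(255, 0, 0), (250, 5, 0), (0, 0, 255), (5, 0, 250)],
         [(255, 0, 0), (250, 5, 0), (0, 0, 255), (5, 0, 250)]]
y, cb, cr = subsample_420(image)
print(count_values([y, cb, cr]), "values instead of", 3 * 8)  # 12 values instead of 24
```

Half the values are gone, yet because every brightness value survives, the eye usually notices very little.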

Audio

Audio Compression

Why audio compression?

Most CD quality audio data is recorded at a sampling rate of 44,100Hz (samples per second) and a sampling resolution of 16 Bits per sample.

CD audio is also stereo, so it has 2 channels:

Raw audio size = 44,100 samples per second x 16 bits x 2 channels = 1,411,200 bits per second

Raw audio size ≈ 1.4 Megabits per second (176,400 bytes, or about 0.18 Megabytes, per second).

While modern 4G/5G data connections can cope with this data rate, if your mobile data allowance was 10GB per month then:

10,000,000,000 / 176,400 ≈ 56,689 seconds ≈ 945 minutes ≈ 15.7 Hours

you would use up your entire month's data allowance in under 16 hours of listening to Spotify, barely half an hour a day!

This is clearly no good!

Most lossy compression algorithms can reduce this to around 128 kilobits per second while still sounding good, a massive 91% reduction from the 1,411 kilobits per second above!
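A short sketch of the audio arithmetic, assuming stereo (2-channel) 16-bit CD audio at 44,100Hz, a 10GB (10,000,000,000 byte) monthly allowance and a 128 kilobit per second compressed stream. `pcm_bits_per_second` is a hypothetical helper name.

```python
def pcm_bits_per_second(sample_rate_hz, bits_per_sample, channels):
    """Bit rate of uncompressed (PCM) audio."""
    return sample_rate_hz * bits_per_sample * channels

cd_bps = pcm_bits_per_second(44_100, 16, 2)           # 1,411,200 bits per second
cd_bytes_per_second = cd_bps / 8                      # 176,400 bytes per second

allowance_bytes = 10_000_000_000                      # assumed 10GB monthly allowance
hours = allowance_bytes / cd_bytes_per_second / 3600  # hours of raw CD audio

compressed_bps = 128_000                              # typical lossy bit rate
reduction = 1 - compressed_bps / cd_bps               # fraction of data removed

print(f"{cd_bps:,} bps, {hours:.1f} hours, {reduction:.0%} reduction")
# 1,411,200 bps, 15.7 hours, 91% reduction
```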

How does it work?

Lossy audio compression draws on psychoacoustics (the study of how humans perceive sound) to decide which parts of an audio file can be removed. Examples include:

- Humans can only hear sounds within a limited range of frequencies (roughly 20Hz to 20,000Hz), so extremely high or low frequencies can be discarded, or described using fewer bits.

- Humans can only detect pitch changes greater than a certain amount, so smaller changes can be discarded or described in less detail.

- Audio masking means that a quieter sound can't be perceived while a louder sound (especially one at a similar frequency) is playing, so the quieter sound can be discarded.
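The first and third ideas above can be illustrated with a toy filter over a list of `(frequency_hz, amplitude)` components. This is a deliberately simplified sketch, not a real psychoacoustic model: the 20Hz to 20,000Hz hearing range is the usual textbook figure, while `MASK_BAND_HZ`, `MASK_RATIO` and `keep_audible` are hypothetical values and names chosen only to show the principle.

```python
HEARING_MIN_HZ = 20
HEARING_MAX_HZ = 20_000
MASK_BAND_HZ = 100   # hypothetical: how close in frequency a louder sound must be to mask
MASK_RATIO = 0.1     # hypothetical: quieter than 10% of that louder sound -> masked

def keep_audible(components):
    """Drop components outside human hearing, then drop components masked by louder neighbours."""
    # Step 1: discard frequencies humans cannot hear at all.
    in_range = [(f, a) for f, a in components if HEARING_MIN_HZ <= f <= HEARING_MAX_HZ]
    kept = []
    for f, a in in_range:
        # Step 2: a component is masked if a much louder one sits close by in frequency.
        masked = any(
            abs(f - f2) <= MASK_BAND_HZ and a < MASK_RATIO * a2
            for f2, a2 in in_range
        )
        if not masked:
            kept.append((f, a))
    return kept

sound = [(10, 0.5), (440, 1.0), (480, 0.05), (1000, 0.3), (25_000, 0.8)]
print(keep_audible(sound))  # [(440, 1.0), (1000, 0.3)]
```

The 10Hz and 25,000Hz components are outside human hearing, and the quiet 480Hz component is masked by the loud 440Hz one, so only two components need to be stored.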

Constant Bit Rate (CBR) vs Variable Bit Rate (VBR)

With Constant Bit Rate (CBR), every second of audio is encoded using the same number of bits, however simple or complex it is. One major compression technique is Variable Bit Rate (VBR), where each section of audio is encoded at a different bit rate depending on the nature and complexity of the audio wave at that point: higher bit rates for detailed parts that the human ear is sensitive to, and lower bit rates for other parts. The average bit rate across the whole file can still be kept at the overall target bit rate.
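A simplified sketch of the difference, assuming each section of audio has already been given a "complexity" score. The scores and the function names are hypothetical; real encoders measure complexity with a psychoacoustic model. Here VBR shares bits in proportion to complexity, so the average still equals the target bit rate.

```python
def cbr_allocation(num_segments, target_kbps):
    """Constant bit rate: every segment gets the same bit rate."""
    return [target_kbps] * num_segments

def vbr_allocation(complexities, target_kbps):
    """Simplified variable bit rate: bits shared in proportion to each segment's complexity,
    so the average across all segments still equals target_kbps."""
    mean = sum(complexities) / len(complexities)
    return [target_kbps * c / mean for c in complexities]

# Hypothetical complexity scores: silence, quiet intro, vocals, full band
complexities = [1, 1, 2, 4]
print(cbr_allocation(4, 128))             # [128, 128, 128, 128]
print(vbr_allocation(complexities, 128))  # [64.0, 64.0, 128.0, 256.0]
```

Both files average 128 kbps, but the VBR version spends its bits where the ear is most likely to notice.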

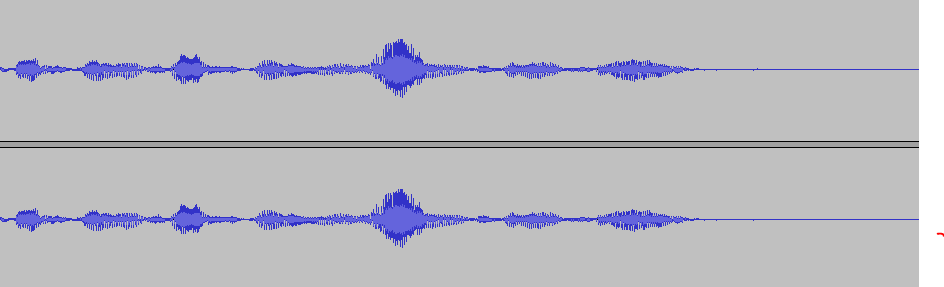

Can you guess which areas of this audio wave might need fewer bits per second and which might need more?

Video

Video Compression

Why video compression?

Without video compression, video files would be HUGE!!!!

Let's imagine we record a 5 minute movie of a cat playing, uncompressed, at 1080p (1920 x 1080).

- 1920 x 1080 = 2,073,600 pixels per frame

- Each pixel requires 3 bytes (one for each RGB colour channel), and standard video cameras record at 24 frames per second.

- 2,073,600 x 3 x 24 = 149,299,200 bytes ≈ 149 Megabytes per second.

- 149 Megabytes x 300 seconds (5 minutes) ≈ 45 Gigabytes (nearly 10 single-layer DVDs' worth of data!)
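The same calculation as a short Python sketch, assuming 3 bytes per pixel, 24 frames per second, a 4.7GB single-layer DVD and a 10GB data allowance; `raw_video_bytes` is a hypothetical helper name and no audio is included.

```python
def raw_video_bytes(width, height, bytes_per_pixel, fps, seconds):
    """Uncompressed video size in bytes (picture only, no audio)."""
    return width * height * bytes_per_pixel * fps * seconds

per_second = raw_video_bytes(1920, 1080, 3, 24, 1)      # 149,299,200 bytes
five_minutes = raw_video_bytes(1920, 1080, 3, 24, 300)  # 44,789,760,000 bytes
dvds = five_minutes / 4_700_000_000                     # single-layer DVD = 4.7GB
seconds_on_10gb = 10_000_000_000 / per_second           # assumed 10GB allowance

print(f"{per_second / 1e6:.0f} MB/s, {five_minutes / 1e9:.1f} GB, "
      f"{dvds:.1f} DVDs, {seconds_on_10gb:.0f} s on 10GB")
# 149 MB/s, 44.8 GB, 9.5 DVDs, 67 s on 10GB
```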

We haven’t even added on any sound data that we might have recorded with the video.

This is obviously a nightmare. With a 10GB monthly data allowance, you would only manage to stream about 1 minute of cat video on your phone before your data ran out!

How does it work?

Video compression works using a number of different techniques.

Spatial Redundancy

Much like in image compression, there is often a close correlation between the contents of a pixel and the contents of its neighboring pixels; this is known as spatial redundancy. Not every pixel needs to be stored in full, so some of the data can be discarded or recorded at a lower bit depth.
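One simple way to exploit spatial redundancy is delta encoding: store the first pixel as-is, then store each following pixel as the difference from its neighbour. Because neighbouring pixels are similar, the differences are small numbers that can be stored in fewer bits. This is a lossless illustration on a single row of brightness values with hypothetical function names; real video codecs use more elaborate prediction within a frame, so treat it only as a sketch of the idea.

```python
def delta_encode(row):
    """Store the first pixel as-is, then each pixel as the difference from its left neighbour."""
    if not row:
        return []
    return [row[0]] + [row[i] - row[i - 1] for i in range(1, len(row))]

def delta_decode(deltas):
    """Rebuild the original row by adding each difference to the previous pixel."""
    if not deltas:
        return []
    row = [deltas[0]]
    for d in deltas[1:]:
        row.append(row[-1] + d)
    return row

row = [100, 101, 101, 103, 104, 104]
print(delta_encode(row))  # [100, 1, 0, 2, 1, 0]
```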

Temporal Redundancy

When a video is recorded, the majority of the image often stays pretty much the same from frame to frame, with only certain areas changing significantly; this is known as temporal redundancy. This means that entire frames, or large parts of each frame, can be discarded and replaced by references to data from the previous frame. Objects within the frame also often move from location to location without changing their appearance. In this case a motion vector describing the movement can be calculated and used to rebuild the following frame from the previous frame's data, instead of storing the object again.
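A minimal sketch of the first idea (frame differencing), assuming each frame is flattened into a simple list of pixel values; the function names are hypothetical. Only the pixels that changed are stored, as `(index, new_value)` pairs, and the next frame is rebuilt from the previous one. Motion vectors are not shown here.

```python
def frame_diff(prev_frame, next_frame):
    """Return (index, new_value) for each pixel that changed between two frames."""
    return [(i, new) for i, (old, new) in enumerate(zip(prev_frame, next_frame)) if old != new]

def apply_diff(prev_frame, changes):
    """Rebuild the next frame from the previous frame plus the list of changes."""
    frame = list(prev_frame)
    for i, value in changes:
        frame[i] = value
    return frame

prev = [10, 10, 10, 10, 10, 10]
nxt = [10, 12, 10, 10, 9, 10]
changes = frame_diff(prev, nxt)
print(changes)  # [(1, 12), (4, 9)]
```

When most of the frame is unchanged, the list of changes is far smaller than the frame itself.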

Using these techniques allows for huge file size reductions with minimal loss in quality, which is especially important when streaming over mobile networks.

Example – Video Stills

Take a look at the video stills below. These are 2 stills that were taken 1 frame apart. Can you tell any differences between them? Open the files in a new tab and see if you can spot any!

Lossless Compression

Lossless compression is where the file size is reduced without affecting the integrity of the data being compressed. Given a compressed file, you should be able to reverse the compression process and be left with exactly the original data.
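Python's standard `zlib` module (which implements DEFLATE, a lossless method that combines LZ77 with Huffman coding) can demonstrate this round trip. The repetitive sample data is an arbitrary choice for illustration.

```python
import zlib

original = b"AAAAAAAAAABBBBBBBBBBAAAAAAAAAA" * 10  # repetitive data compresses well
compressed = zlib.compress(original)
restored = zlib.decompress(compressed)

print(len(original), "bytes ->", len(compressed), "bytes")
print(restored == original)  # True: every byte is recovered exactly
```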

Lossless compression is useful where absolute integrity of the data is required, for instance:

- A photographer taking professional photos that are going to be edited and enhanced.

- Where photo enlargement is going to be needed.

- When recording a band’s album in a studio

- Astronomical photography, where the source image might contain only tiny variations in luminance/color.

How much lossless compression can achieve depends on the data being stored, but it will often reduce the file size by between 15% and 50%.

Common lossless compression algorithms include Run-Length Encoding and Huffman Tree encoding.

Lossy Compression

Lossy compression algorithms take advantage of the limits of human sight and hearing by removing detail that we would not notice. Different algorithms are used depending on the type of data being compressed.

Depending on the type of algorithm used, the nature of the data, and how much loss of quality is deemed acceptable, lossy compression can reduce the file size by over 85%.
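Quantisation (reducing how precisely each value is stored) is one of the building blocks many lossy algorithms use. The sketch below, with hypothetical function names, keeps only the top 4 bits of each 8-bit sample, halving the storage, and shows that the original values cannot be recovered exactly. Real codecs quantise transformed data more cleverly; this only shows why the process is irreversible.

```python
def quantize(samples, drop_bits=4):
    """Keep only the top (8 - drop_bits) bits of each 8-bit value."""
    return [s >> drop_bits for s in samples]

def dequantize(codes, drop_bits=4):
    """Map each code back to the middle of the range of values it stood for."""
    return [(c << drop_bits) + (1 << (drop_bits - 1)) for c in codes]

samples = [200, 201, 17, 18]
codes = quantize(samples)  # [12, 12, 1, 1]: 4 bits each instead of 8
print(dequantize(codes))   # [200, 200, 24, 24]: close, but not the original
```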

Compression Artifacts

Lossy compression, especially when applied heavily, can introduce noticeable visual or audio artifacts into the data.

Heavily compressed images show noticeable defects, and heavily compressed audio files result in ‘tinny’ sounding music.

Example of heavy JPEG image compression. Notice the blocky artifacts in the image? Source – Wikipedia

Encoding

Encoding Techniques

Both lossless and lossy compression use encoding techniques as the final step in the compression process. The two encoding techniques that you need to be aware of are Run Length Encoding (RLE) and Huffman Encoding.

Run Length Encoding (RLE)
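Run Length Encoding replaces each run of repeated values with a single copy of the value plus a count. A minimal sketch on a string, storing `(character, run_length)` pairs (function names are hypothetical):

```python
def rle_encode(data):
    """Run-length encode a string into a list of (character, run_length) pairs."""
    runs = []
    for ch in data:
        if runs and runs[-1][0] == ch:
            runs[-1] = (ch, runs[-1][1] + 1)  # extend the current run
        else:
            runs.append((ch, 1))              # start a new run
    return runs

def rle_decode(runs):
    """Expand (character, run_length) pairs back into the original string."""
    return "".join(ch * count for ch, count in runs)

print(rle_encode("WWWWBBBW"))  # [('W', 4), ('B', 3), ('W', 1)]
```

RLE only helps when the data contains long runs; on data with few repeats the pairs can take up more space than the original.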

Huffman Encoding

Tom Scott’s Video (watch first)

Once you've got the basics, watch this video for a slower, more detailed explanation…
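A compact Huffman sketch using Python's standard `heapq` and `collections.Counter`: it counts how often each character appears, repeatedly merges the two least frequent subtrees, and prefixes `0` to codes on one side and `1` on the other, so frequent characters end up with shorter codes. The function names and the tie-breaking counter are implementation choices for this sketch, not part of any standard.

```python
import heapq
from collections import Counter

def huffman_codes(text):
    """Build a Huffman code table (character -> bit string) for the given text."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        return {next(iter(freq)): "0"}  # only one distinct character: give it a 1-bit code
    # Heap entries: (frequency, tie_breaker, {character: code_so_far})
    heap = [(count, i, {ch: ""}) for i, (ch, count) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)   # least frequent subtree
        f2, _, right = heapq.heappop(heap)  # second least frequent subtree
        merged = {ch: "0" + code for ch, code in left.items()}
        merged.update({ch: "1" + code for ch, code in right.items()})
        heapq.heappush(heap, (f1 + f2, next_id, merged))
        next_id += 1
    return heap[0][2]

def huffman_encode(text, codes):
    return "".join(codes[ch] for ch in text)

def huffman_decode(bits, codes):
    reverse = {code: ch for ch, code in codes.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in reverse:  # codes are prefix-free, so the first match is correct
            out.append(reverse[current])
            current = ""
    return "".join(out)

text = "ABRACADABRA"
codes = huffman_codes(text)
encoded = huffman_encode(text, codes)
print(len(encoded), "bits vs", 8 * len(text), "bits uncompressed")  # 23 bits vs 88 bits uncompressed
```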

Resources

Past Paper Questions

Interlacing – S19 Paper 11 – Qn6

Progressive Encoding – N18 Paper 11 Qn 1d/e

Spatial & temporal redundancy – N16 12 Qn 2